Paper

Human Mesh Recovery from Multiple Shots

Georgios Pavlakos, Jitendra Malik, Angjoo Kanazawa

CVPR 2022

[pdf] [bibtex] [Code and Data]Human Mesh Recovery from Multiple Shots

CVPR 2022Videos from edited media like movies are a useful, yet under-explored source of information. The rich variety of appearance and interactions between humans depicted over a large temporal context in these films could be a valuable source of data. However, the richness of data comes at the expense of fundamental challenges such as abrupt shot changes and close up shots of actors with heavy truncation, which limits the applicability of existing human 3D understanding methods. In this paper, we address these limitations with an insight that while shot changes of the same scene incur a discontinuity between frames, the 3D structure of the scene still changes smoothly. This allows us to handle frames before and after the shot change as multi-view signal that provide strong cues to recover the 3D state of the actors. We propose a multi-shot optimization framework, which leads to improved 3D reconstruction and mining of long sequences with pseudo ground truth 3D human mesh. We show that the resulting data is beneficial in the training of various human mesh recovery models: for single image, we achieve improved robustness; for video we propose a pure transformer-based temporal encoder, which can naturally handle missing observations due to shot changes in the input frames. We demonstrate the importance of the insight and proposed models through extensive experiments. The tools we develop open the door to processing and analyzing in 3D content from a large library of edited media, which could be helpful for many downstream applications.

Paper

Human Mesh Recovery from Multiple Shots

Georgios Pavlakos, Jitendra Malik, Angjoo Kanazawa

CVPR 2022

[pdf] [bibtex] [Code and Data]Bibtex

Video

Overview

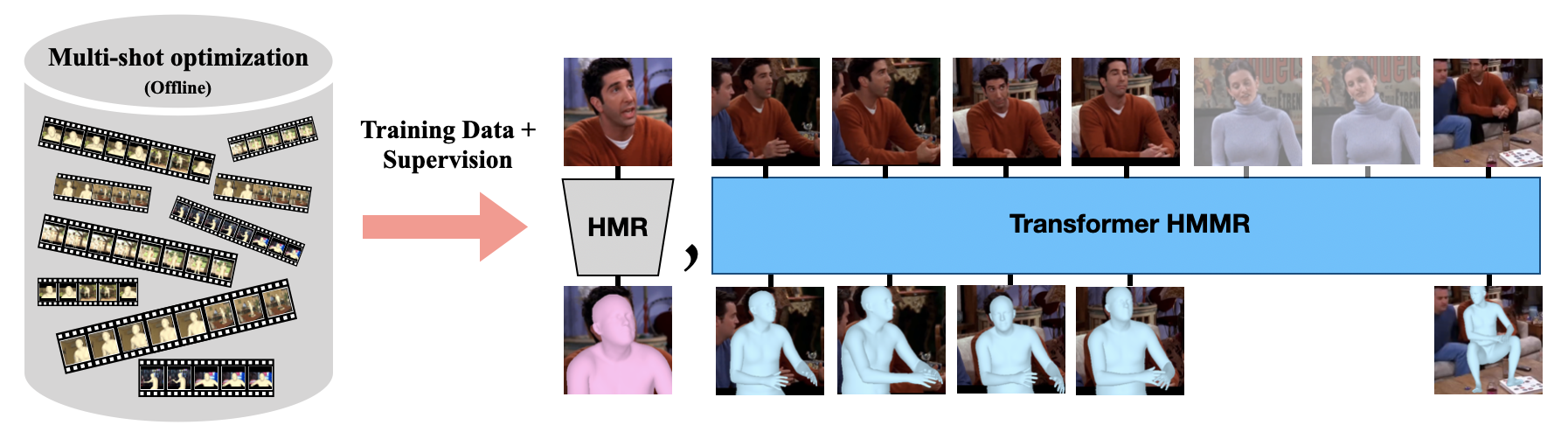

We propose a multi-shot optimization method that allows us to reconstruct humans across shot changes. We apply this optimization step offline to build a large dataset of pseudo ground truth 3D human sequences from movies. Then, we use the collected data, to train computational models that can directly recover the human mesh from a single image, or from a video sequence that can include shot changes.

Samples from Multi-Shot AVA

Comparison

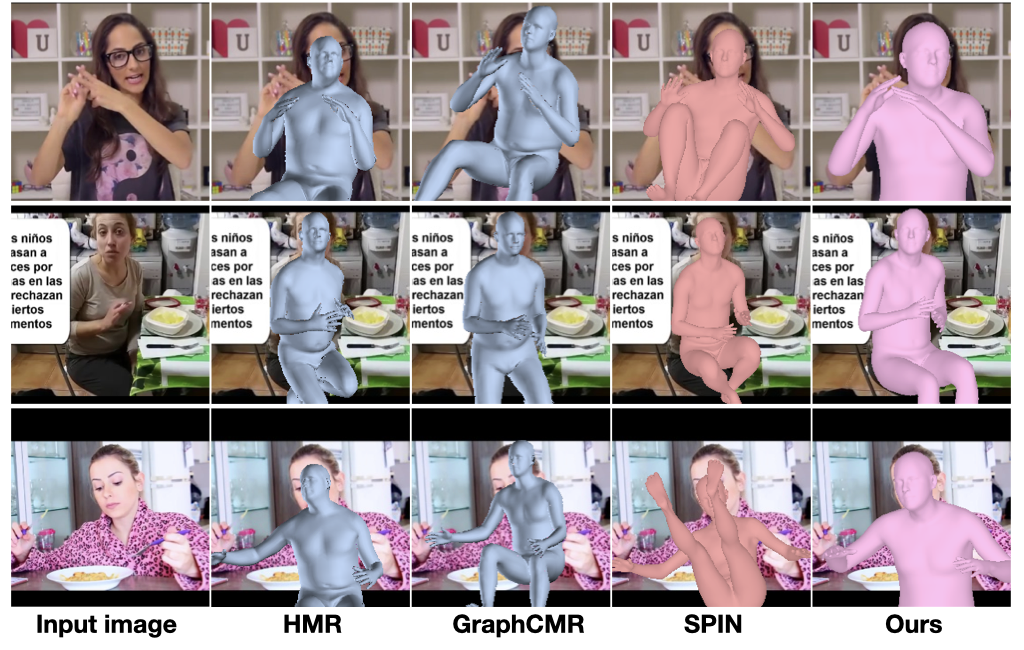

Many previous approaches for 3D human reconstruction were designed primarily with performance capture in mind, so they can often fail on data from edited media. Our methods are robust and successful in these scenarios as well.

Acknowledgements

This research was supported by BAIR sponsors.

We want to thank Stephen Phillips for the video voiceover. GP wants to thank Nikos Kolotouros for helpful discussions.

The design of this project page was based on this website.