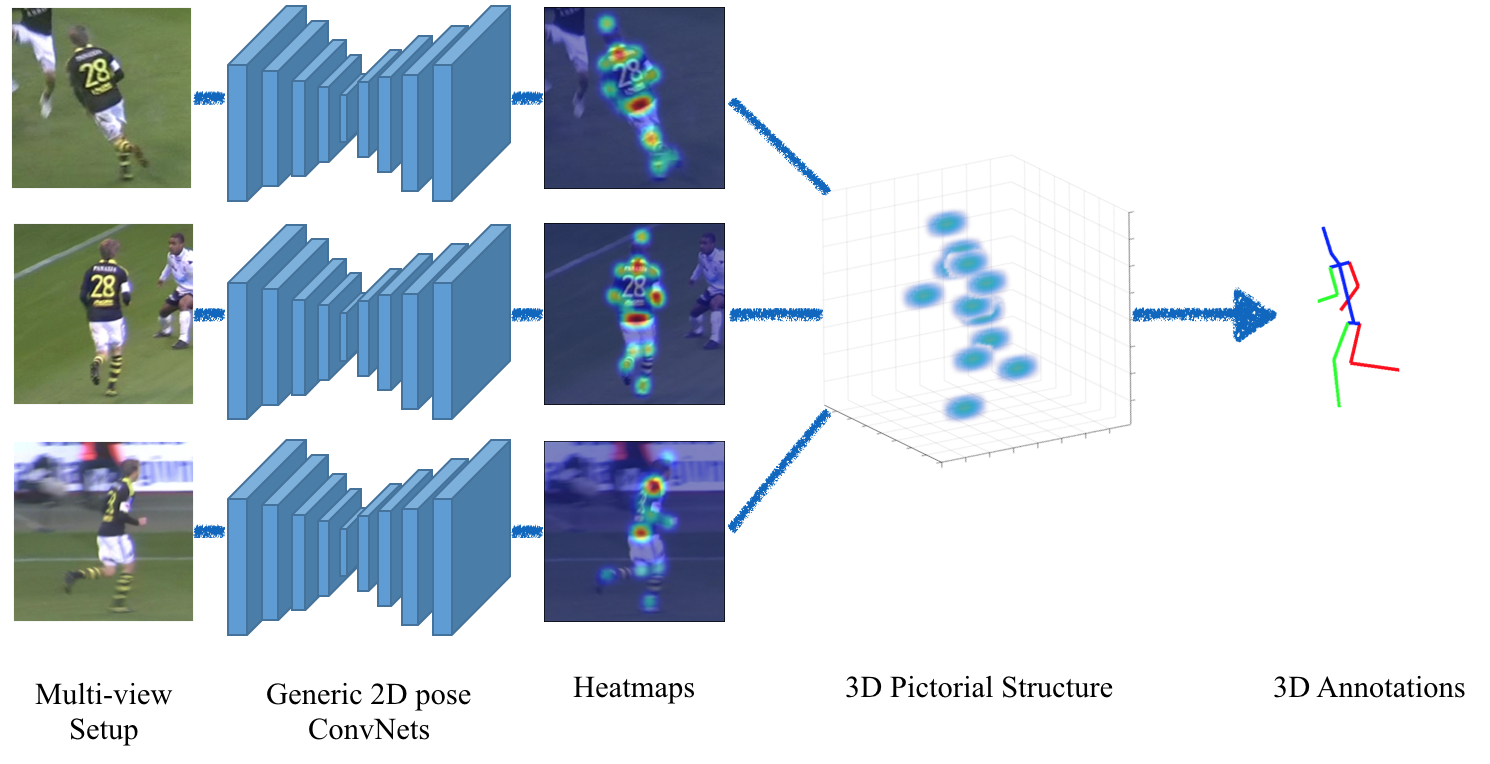

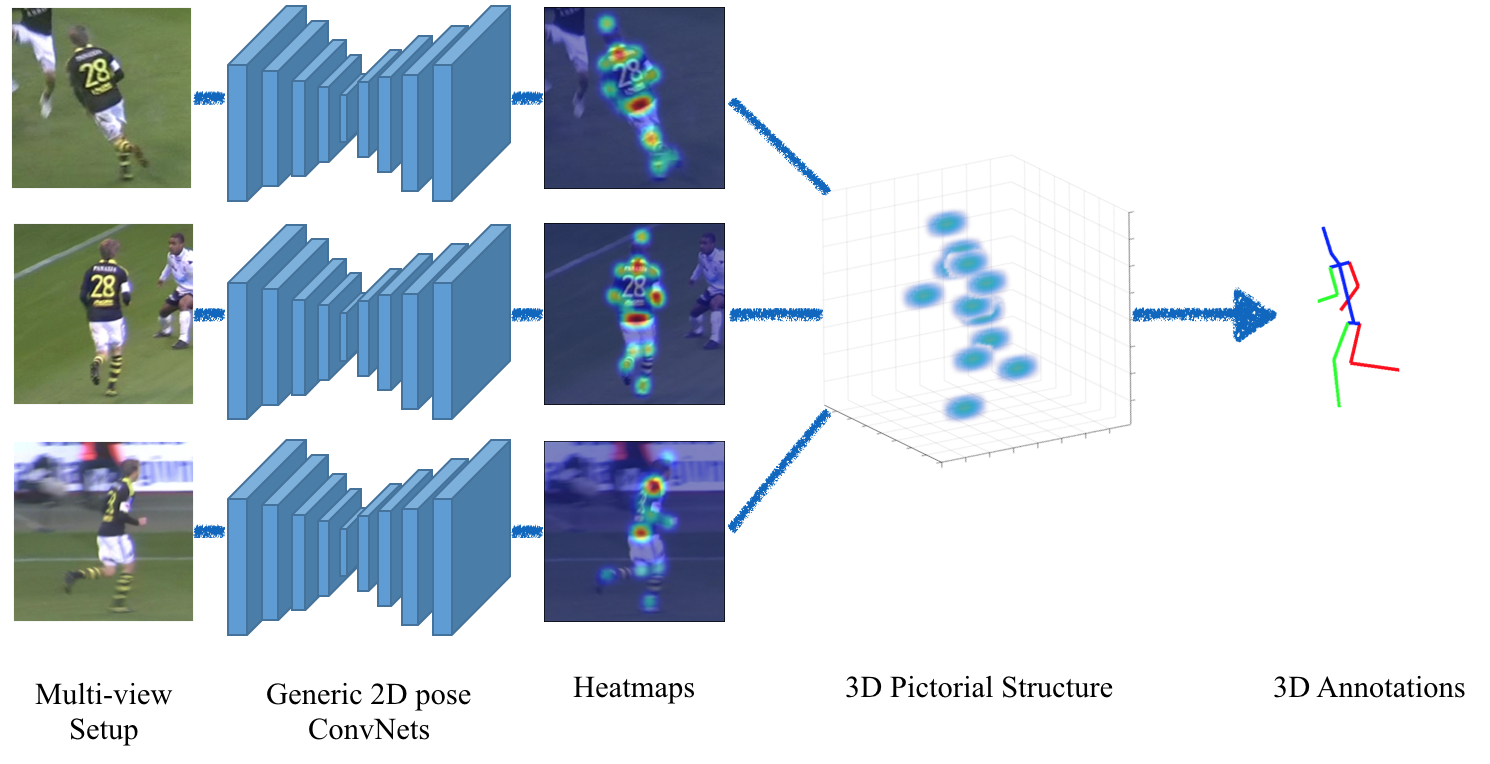

Recent advances with Convolutional Networks (ConvNets) have shifted the bottleneck for many computer vision tasks to annotated data collection. In this paper, we present a geometry-driven approach to automatically collect annotations for human pose prediction tasks. Starting from a generic ConvNet for 2D human pose, and assuming a multi-view setup, we describe an automatic way to collect accurate 3D human pose annotations. We capitalize on constraints offered by the 3D geometry of the camera setup and the 3D structure of the human body to probabilistically combine per view 2D ConvNet predictions into a globally optimal 3D pose. This 3D pose is used as the basis for harvesting annotations. The benefit of the annotations produced automatically with our approach is demonstrated in two challenging settings: (i) fine-tuning a generic ConvNet-based 2D pose predictor to capture the discriminative aspects of a subject's appearance (i.e.,"personalization"), and (ii) training a ConvNet from scratch for single view 3D human pose prediction without leveraging 3D pose groundtruth. The proposed multi-view pose estimator achieves state-of-the-art results on standard benchmarks, demonstrating the effectiveness of our method in exploiting the available multi-view information.

Georgios Pavlakos,

Xiaowei Zhou,

Konstantinos G. Derpanis,

Kostas Daniilidis

Computer Vision and Pattern Recognition (CVPR), 2017 (Spotlight Presentation)

project page

/

supplementary

/

video

/

code

/

bibtex

@inproceedings{pavlakos2017harvesting,

Author = {Pavlakos, Georgios and Zhou, Xiaowei and Derpanis, Konstantinos G and Daniilidis, Kostas},

Title = {Harvesting Multiple Views for Marker-less 3D Human Pose Annotations},

Booktitle = {CVPR},

Year = {2017}}

For any questions regarding this work, please contact the corresponding author at pavlakos@seas.upenn.edu